Synthesizing time-varying BRDFs via latent space

Abstract

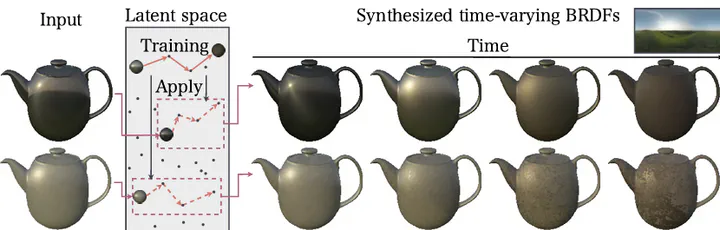

This paper introduces a method for synthesizing time-varying bidirectional reflectance distribution functions (BRDFs) by applying learned temporal changes to static BRDFs. Achieving realistic and natural changes in material appearance over time is crucial in computer graphics and virtual reality. Existing methods employ a parametric BRDF model and represent the temporal changes in BRDFs with polynomial functions that describe the transitions of the BRDF parameters. However, the limited representational capabilities of both the parametric BRDF model and the polynomial temporal model restrict the fidelity of the appearance reproduction. To overcome this limitation, we introduce a neural embedding for BRDFs and propose a neural temporal model that represents the temporal changes of BRDFs in the latent space, which allows flexible representations of both BRDFs and their temporal changes. Experiments on synthetic and real-world datasets demonstrate that the flexibility of the proposed approach achieves a faithful synthesis of temporal changes in material appearance.

Publication

In European Conference on Computer Vision (ECCV 2024)