Instance-wise distribution control of text-to-image diffusion models

Oct 31, 2025

Weng Ian Chan

Hiroaki Santo

Yasuyuki Matsushita

Fumio Okura

Abstract

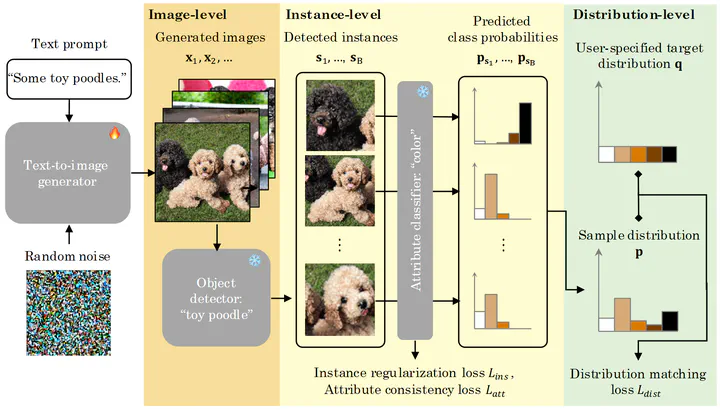

Text-to-image diffusion models are increasingly used to generate synthetic datasets for downstream vision tasks. However, they often inherit biases from their large-scale training data, which can result in unbalanced attribute distributions in the generated images. Prior efforts to mitigate these biases mostly focus on single-object images and struggle to control attributes across object instances in multi-instance generations. To address this limitation, we propose instance-wise control of the attribute distribution, achieved by fine-tuning diffusion models with guidance from a pre-trained object detector and an attribute classifier. Our approach aligns the attribute distribution over object instances in generated images with a user-defined distribution, enabling precise control over attribute proportions at the instance level. Experiments across various objects and attributes demonstrate that our method generates high-quality multi-instance images that match the specified distribution, supporting the scalable creation of distribution-aware synthetic datasets for in-the-wild vision tasks.
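The idea of aligning an instance-level attribute distribution with a user-defined target can be illustrated with a small sketch. It assumes that a detector has already found the object instances in a batch of generated images and that an attribute classifier has produced a probability vector for each instance. The instance vectors are pooled across the batch into an empirical distribution, which is then compared to the target with a KL divergence. The function names, the pooling rule, and the choice of KL divergence are illustrative assumptions, not the objective used in the paper. A real fine-tuning loop would compute the same quantity on differentiable tensors rather than with plain Python floats.

```python
import math
from typing import List, Sequence


def empirical_attribute_distribution(instance_probs: Sequence[Sequence[float]]) -> List[float]:
    """Average per-instance attribute probabilities over all detected instances (hypothetical helper)."""
    if not instance_probs:
        raise ValueError("need at least one detected instance")
    num_classes = len(instance_probs[0])
    totals = [0.0] * num_classes
    for probs in instance_probs:
        if len(probs) != num_classes:
            raise ValueError("inconsistent number of attribute classes")
        for j, p in enumerate(probs):
            totals[j] += p
    n = len(instance_probs)
    return [t / n for t in totals]


def kl_divergence(target: Sequence[float], estimate: Sequence[float], eps: float = 1e-8) -> float:
    """KL(target || estimate); eps guards against log(0) for attributes that never appear."""
    return sum(
        t * math.log((t + eps) / (e + eps))
        for t, e in zip(target, estimate)
        if t > 0
    )


def distribution_alignment_loss(
    batch_instance_probs: Sequence[Sequence[Sequence[float]]],
    target: Sequence[float],
) -> float:
    """Hypothetical instance-level loss: pool instances from every image, then compare to the target."""
    # Flatten: each image contributes a variable number of detected instances.
    all_instances = [probs for image in batch_instance_probs for probs in image]
    return kl_divergence(target, empirical_attribute_distribution(all_instances))


if __name__ == "__main__":
    # Two generated images, two attribute values (e.g. two colors); the values are made up.
    batch = [
        [[0.9, 0.1], [0.2, 0.8], [0.7, 0.3]],  # image 1: three detected instances
        [[0.1, 0.9]],                          # image 2: one detected instance
    ]
    target = [0.5, 0.5]  # user-defined distribution: equal proportions
    print(distribution_alignment_loss(batch, target))
```

Pooling over instances rather than over images is what makes the sketch "instance-wise": an image with three objects contributes three samples to the empirical distribution, so the target proportion applies to objects, not to whole pictures.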

Type

Publication

Pattern Recognition